Why Evaluation Matters

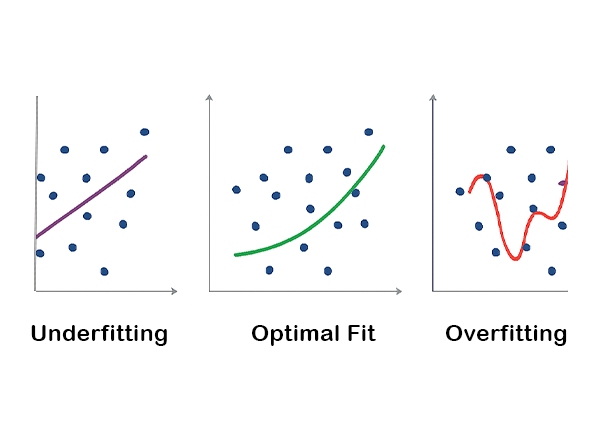

Proper model evaluation is critical for ensuring your AI performs reliably in production. It helps identify issues like overfitting, ensures fairness across different groups, and validates that your model is truly ready for real-world deployment.

- Avoiding overfitting and ensuring generalization

- Detecting bias and ensuring fairness

- Production readiness validation

- Performance optimization insights

Our Testing Methods

Comprehensive evaluation approaches to ensure reliable model performance

Cross-validation

K-fold validation for robust performance estimates. Split the data into K folds, train on K-1 folds, and test on the remaining fold. Repeat K times so that every fold serves as the test set exactly once, then average the K scores.

- Reduces the bias of evaluating on a single split

- More stable performance estimates

- Better use of available data
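A minimal sketch of K-fold cross-validation in plain Python. The helper names (`k_fold_indices`, `cross_validate`) and the toy one-feature threshold model are illustrative assumptions, not a specific library's API; in practice you would pass in your own training and scoring functions.

```python
import random
from statistics import mean, stdev

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and deal them into k folds whose sizes differ by at most one."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_validate(X, y, fit, score, k=5, seed=0):
    """Train on k-1 folds, score on the held-out fold, and repeat so each fold is tested once."""
    folds = k_fold_indices(len(X), k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        model = fit([X[j] for j in train_idx], [y[j] for j in train_idx])
        scores.append(score(model, [X[j] for j in test_idx], [y[j] for j in test_idx]))
    return scores

# Hypothetical toy model: a threshold halfway between the two class means of one feature.
def fit_threshold(X, y):
    pos = [x for x, t in zip(X, y) if t == 1]
    neg = [x for x, t in zip(X, y) if t == 0]
    return (mean(pos) + mean(neg)) / 2

def accuracy(threshold, X, y):
    return mean(1 if (x >= threshold) == (t == 1) else 0 for x, t in zip(X, y))

X = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9] * 5
y = [0, 0, 0, 0, 1, 1, 1, 1] * 5
scores = cross_validate(X, y, fit_threshold, accuracy, k=5)
print(f"mean accuracy {mean(scores):.3f} +/- {stdev(scores):.3f} across {len(scores)} folds")
```

Reporting the spread across folds alongside the mean is what makes the estimate "more stable" than a single split: a large standard deviation signals that performance depends heavily on which data the model happened to see.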

Holdout Testing

Dedicated test sets for unbiased evaluation. Reserve a portion of the data exclusively for testing; it is never used during the training or validation phases.

- Unbiased performance assessment

- Simulates real-world deployment

- Final validation checkpoint
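A small sketch of a holdout split, assuming records can be shuffled freely (no time ordering to preserve). The function name `holdout_split` and the 60/20/20 proportions are illustrative choices, not a prescribed setup. The test set is carved off first so that later tuning on the validation set never touches it.

```python
import random

def holdout_split(records, test_fraction=0.2, seed=42):
    """Shuffle once with a fixed seed, then set aside the last test_fraction of records."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be strictly between 0 and 1")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[:-n_test], shuffled[-n_test:]

# Lock away the test set first, then split a validation set out of what remains,
# so tuning decisions never see the test records (60/20/20 overall).
train_val, test = holdout_split(range(100), test_fraction=0.2)
train, val = holdout_split(train_val, test_fraction=0.25)
print(len(train), len(val), len(test))  # 60 20 20
```

For time-ordered data such as transactions, a random shuffle can leak future information into training; a chronological cut (train on older records, test on newer ones) is usually the safer holdout in that case.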

Bootstrapping

Statistical resampling for confidence intervals. Generate many samples by resampling the data at random with replacement, then use the spread of the resulting scores to estimate the performance distribution and its confidence intervals.

- Performance confidence intervals

- Uncertainty quantification

- Robust statistical analysis
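A minimal percentile-bootstrap sketch for a confidence interval around test-set accuracy. The resample count (2,000), the 95% level, and the toy predictions are assumptions for illustration; any metric function with the same `(y_true, y_pred)` signature can be swapped in.

```python
import random

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def bootstrap_ci(y_true, y_pred, metric, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap: resample test cases with replacement, recompute the metric each time."""
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # one bootstrap sample, same size as the test set
        stats.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    stats.sort()
    lower = stats[int(alpha / 2 * n_resamples)]
    upper = stats[min(n_resamples - 1, int((1 - alpha / 2) * n_resamples))]
    return metric(y_true, y_pred), lower, upper

# Hypothetical test-set predictions: 100 cases, 10 of them wrong.
y_true = [1, 0] * 50
y_pred = [1 - t if i < 10 else t for i, t in enumerate(y_true)]
point, lower, upper = bootstrap_ci(y_true, y_pred, accuracy)
print(f"accuracy {point:.2f}, 95% CI [{lower:.2f}, {upper:.2f}]")
```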

A/B Testing

Live model comparison in production. Deploy competing models to randomly assigned subsets of users to compare their performance in real-world conditions with actual traffic.

- Real-world performance

- User impact assessment

- Business metrics validation
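One common way to decide whether an A/B difference is real rather than noise, sketched here under the assumption that the business metric is a per-user success rate (for example, conversions): a two-sided, two-proportion z-test. The function name and the traffic numbers are hypothetical.

```python
import math

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided pooled z-test for a difference between two success rates (B minus A)."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both arms are all successes or all failures; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value

# Hypothetical numbers: 10,000 users per arm, 200 vs 260 successful outcomes.
lift, z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"lift {lift:+.4f}, z = {z:.2f}, p = {p:.4f}")
```

The sample size per arm should be fixed before the test starts; repeatedly checking the p-value and stopping as soon as it dips below 0.05 inflates the false-positive rate of the comparison itself.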

Key Evaluation Metrics

Industry-standard metrics to comprehensively assess model performance

- Accuracy

- Precision

- Recall

- F1-Score

- ROC-AUC
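A from-scratch sketch of the five metrics for a binary classifier, assuming labels are 0/1 and the model outputs a score where higher means "more likely positive". The 0.5 threshold is an assumption; ROC-AUC is computed by comparing every positive/negative pair, which is easy to read but quadratic, so libraries such as scikit-learn's `roc_auc_score` are preferable on large data.

```python
def roc_auc(y_true, scores):
    """Probability that a random positive outranks a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def classification_metrics(y_true, scores, threshold=0.5):
    """Threshold scores into labels, then compute the five metrics listed above."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "roc_auc": roc_auc(y_true, scores),  # threshold-free: uses the raw scores
    }

y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1, 0.05]
for name, value in classification_metrics(y_true, scores).items():
    print(f"{name:>9}: {value:.3f}")
```

For this toy data the sketch reports accuracy 0.75, precision and recall of 2/3 each (so F1 is also 2/3), and ROC-AUC of 14/15, about 0.933. On imbalanced problems such as fraud detection, accuracy alone can look high even for a model that flags nothing, which is why precision and recall are reported alongside it.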

Case Study

Fraud Detection Model Improved from 88% to 94% Precision

Problem

A financial institution's fraud detection model had a high false positive rate, flagging legitimate transactions as fraud and frustrating customers.

Solution

Comprehensive evaluation revealed class imbalance issues. We implemented stratified sampling, precision-recall optimization, and decision-threshold tuning.
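The case study does not say exactly how the threshold was tuned. One common approach, sketched here as an assumption, is to choose the lowest score threshold whose precision on validation data meets a target: because recall can only fall as the threshold rises, that choice keeps as much recall as possible. The function names, target values, and data below are hypothetical.

```python
def precision_recall_at(y_true, scores, threshold):
    """Precision and recall when every score >= threshold is flagged as fraud."""
    tp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 1)
    fp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 0)
    fn = sum(1 for t, s in zip(y_true, scores) if s < threshold and t == 1)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def tune_threshold(y_true, scores, target_precision):
    """Lowest candidate threshold meeting the precision target, or None if none does.

    Candidates are the observed scores, so at least one case is always flagged.
    """
    for threshold in sorted(set(scores)):
        precision, recall = precision_recall_at(y_true, scores, threshold)
        if precision >= target_precision:
            return threshold, precision, recall
    return None

# Hypothetical validation-set labels (1 = fraud) and model scores.
y_val = [0, 0, 0, 0, 0, 0, 1, 0, 1, 1]
s_val = [0.1, 0.2, 0.3, 0.35, 0.4, 0.5, 0.55, 0.6, 0.8, 0.9]
print(tune_threshold(y_val, s_val, target_precision=0.9))
```

The threshold should be chosen on validation data and then checked once on the untouched holdout set; with heavy class imbalance, stratified sampling keeps the fraud share in each split close to the overall rate so that precision estimates are not distorted by an unlucky split.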

Result

- Precision improved from 88% to 94%

- False positive rate reduced by 50%

- Customer complaints decreased by 65%

- Annual cost savings of $2.3M
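As a rough consistency check on the first two results: precision is the share of flagged transactions that are truly fraudulent, so one minus precision is the share of flagged transactions that are false alarms.

```latex
\text{share of flagged transactions that are false alarms}
  = \frac{FP}{TP + FP} = 1 - \text{precision}

\text{before: } 1 - 0.88 = 0.12, \qquad
\text{after: } 1 - 0.94 = 0.06, \qquad
\frac{0.12 - 0.06}{0.12} = 0.50
```

This matches the reported 50% reduction if that figure refers to false alarms as a share of flagged transactions. The conventional false positive rate, FP / (FP + TN), also depends on the number of legitimate transactions, which the case study does not report.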

Figure: Performance Metrics Comparison, before vs. after evaluation and tuning (precision, recall, F1-score)

Why Work With Us for Model Evaluation

Rigorous evaluation processes that ensure your models perform reliably in production

Comprehensive testing protocols

Complete evaluation using multiple methods including cross-validation, holdout testing, and bootstrapping for robust assessment.

Industry-compliant standards

Adherence to industry best practices and regulatory requirements for model validation and risk management.

Transparent reporting

Detailed evaluation reports with clear metrics, visualizations, and actionable recommendations for model improvement.

Bias and fairness assessment

Thorough evaluation for potential biases and fairness issues across different demographic groups and use cases.
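The page does not specify which fairness criteria are assessed. As an assumption, this sketch compares two common ones across groups: the selection rate (share of each group flagged), whose largest gap is often called the demographic parity difference, and recall (share of true positives caught), whose largest gap is often called the equal opportunity difference. The function names and data are hypothetical.

```python
from collections import defaultdict

def per_group_rates(y_true, y_pred, groups):
    """Selection rate (share flagged) and recall for each group; labels are 0/1."""
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "positives": 0, "tp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["selected"] += p
        c["positives"] += t
        c["tp"] += int(t == 1 and p == 1)
    return {
        g: {
            "selection_rate": c["selected"] / c["n"],
            "recall": c["tp"] / c["positives"] if c["positives"] else None,
        }
        for g, c in counts.items()
    }

def fairness_gaps(rates):
    """Largest between-group differences in selection rate and in recall."""
    selection = [r["selection_rate"] for r in rates.values()]
    recall = [r["recall"] for r in rates.values() if r["recall"] is not None]
    return {
        "selection_rate_gap": max(selection) - min(selection),  # demographic parity difference
        "recall_gap": max(recall) - min(recall) if recall else None,  # equal opportunity difference
    }

# Hypothetical predictions for two groups, "A" and "B".
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
rates = per_group_rates(y_true, y_pred, groups)
print(rates)
print(fairness_gaps(rates))
```

Which gap matters, and how large a gap is acceptable, depends on the use case and any applicable regulation; the numbers are a starting point for review rather than a pass/fail verdict.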

Ready to Validate Your Model's Performance?

Let our evaluation experts ensure your model is production-ready and performs reliably in real-world scenarios.

- Comprehensive evaluation in 1-2 weeks

- Industry-standard metrics and methods

- Detailed reporting and recommendations